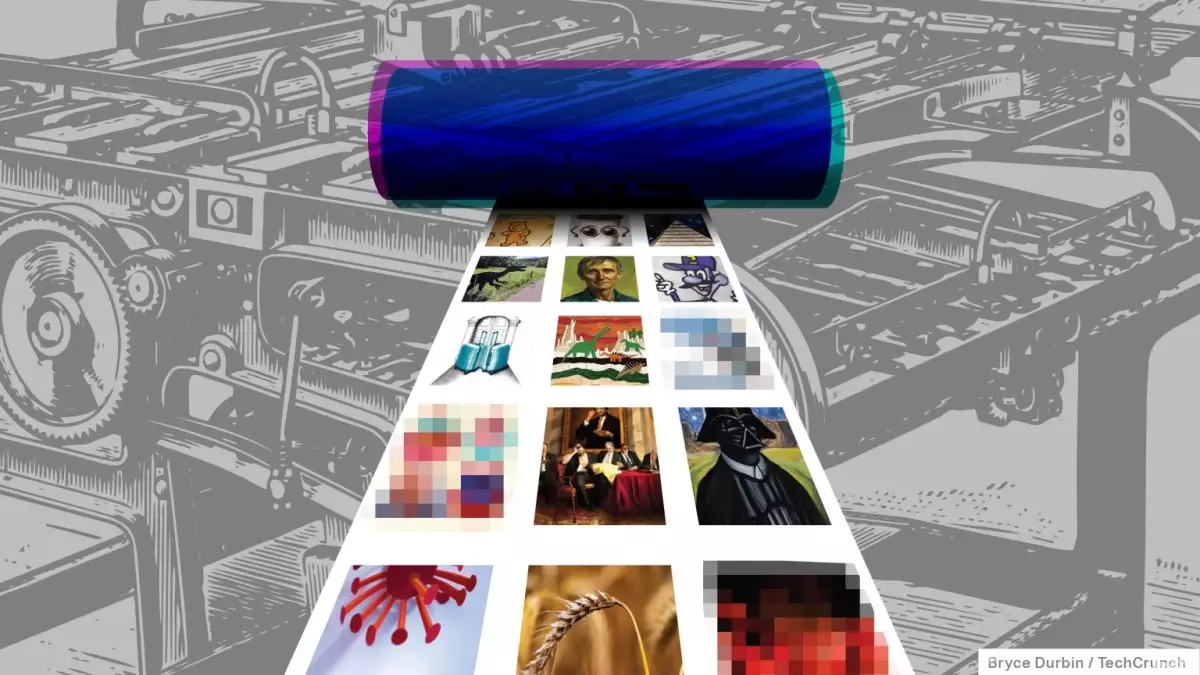

New image generation models tend to launch to both excitement and scrutiny. Stability AI recently unveiled the Stable Diffusion 3.5 series, which it says offers greater customization and versatility. The announcement follows earlier controversies for the company, particularly over technical problems and licensing complexities. As AI systems spread across industries, reliable performance and responsible usage frameworks matter more than ever.

The Stable Diffusion 3.5 series comprises three models, each aimed at a different use case: Stable Diffusion 3.5 Large, Stable Diffusion 3.5 Large Turbo, and Stable Diffusion 3.5 Medium. The flagship, Stable Diffusion 3.5 Large, has 8 billion parameters, making it the most capable of the three, and generates images at resolutions up to 1 megapixel. As a rule of thumb, models with more parameters tend to perform better, which matters for users who need high-fidelity output.

For enterprises and developers who prioritize speed over quality, Stable Diffusion 3.5 Large Turbo is a distilled version of the flagship that generates images faster. Stable Diffusion 3.5 Medium, meanwhile, is optimized for edge devices such as smartphones and laptops and generates images at resolutions between 0.25 and 2 megapixels. This range of offerings reflects Stability AI’s commitment to accessibility, allowing a broader audience to use advanced image generation.
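The resolution figures quoted for the three models can be turned into a quick sanity check. The following is a minimal sketch, not an official tool: the `Variant` class, the dictionary keys, and the helper names are hypothetical, and it assumes that "1 megapixel" means 1024 x 1024 pixels, which the announcement does not define. Where no figure is given for a model, the sketch records `None` rather than guessing.

```python
from dataclasses import dataclass
from typing import Optional

# Assumption: "1 megapixel" is taken to mean 1024 x 1024 pixels.
# The article does not define the unit.
PIXELS_PER_MEGAPIXEL = 1024 * 1024


@dataclass(frozen=True)
class Variant:
    """Hypothetical record of what the article states about one model."""
    name: str
    min_mp: Optional[float]  # None where the article gives no figure
    max_mp: Optional[float]  # None where the article gives no figure
    note: str


VARIANTS = {
    "large": Variant("Stable Diffusion 3.5 Large", None, 1.0,
                     "8 billion parameters, most capable"),
    "large-turbo": Variant("Stable Diffusion 3.5 Large Turbo", None, None,
                           "distilled, faster generation"),
    "medium": Variant("Stable Diffusion 3.5 Medium", 0.25, 2.0,
                      "optimized for edge devices"),
}


def megapixels(width: int, height: int) -> float:
    """Convert pixel dimensions to megapixels under the assumption above."""
    return width * height / PIXELS_PER_MEGAPIXEL


def in_stated_range(key: str, width: int, height: int) -> Optional[bool]:
    """True/False if the size fits the stated range, None if no range is stated."""
    variant = VARIANTS[key]
    if variant.min_mp is None and variant.max_mp is None:
        return None
    mp = megapixels(width, height)
    if variant.min_mp is not None and mp < variant.min_mp:
        return False
    if variant.max_mp is not None and mp > variant.max_mp:
        return False
    return True
```

Under these assumptions, `in_stated_range("medium", 512, 512)` reports that a 512 x 512 image sits at the lower edge of the Medium model's stated range, while `in_stated_range("large-turbo", 1024, 1024)` returns `None` because no resolution figure is given for the Turbo model.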

One of the critical selling points of the new models is their potential for generating more diverse outputs. Stability AI asserts that these models can produce images reflecting a broader spectrum of skin tones and features without necessitating extensive prompting. In a recent interview, Hanno Basse, the Chief Technology Officer of Stability, explained that training included multiple prompt variations, enhancing the model’s ability to understand and represent varied concepts.

Some caution is warranted, however. Past attempts by other companies to diversify AI outputs have led to public backlash. Google’s Gemini chatbot, for instance, drew significant criticism for generating historically inaccurate depictions of people. Whether Stability’s approach to diversity is executed with the necessary sensitivity and thoughtfulness remains to be seen.

Despite the upgrades, the Stability team is upfront about potential problems. Previous models, particularly Stable Diffusion 3 Medium, produced peculiar artifacts and did not always adhere to user prompts. The company advises that while the new models are designed to improve quality, users may still encounter errors. Because of engineering and architectural decisions, output quality can vary with how specific the prompt is, and the same input can yield a broad range of creative variations.

The crux of the matter is balancing innovation with user expectations. Stability AI emphasizes the need for detailed prompting to mitigate uncertainty in outputs. As the field of AI image generation becomes increasingly competitive, the challenge lies in offering models that deliver consistent performance while addressing user demands for creativity and utility.

A notable aspect of Stability AI’s operation is its licensing model. The Stable Diffusion 3.5 series remains free for non-commercial use, and small businesses, defined as those with under $1 million in annual revenue, may also use the models commercially without fees. Companies above that threshold must secure an enterprise license, a stipulation that has drawn attention given the company’s earlier restrictive terms on model fine-tuning.
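The licensing tiers described above reduce to a simple decision rule. The sketch below is illustrative only and is not a reading of the actual license text: the function name and tier labels are hypothetical, and because the article does not say how revenue of exactly $1 million is treated, the code assumes that it falls on the enterprise side.

```python
# Threshold quoted in the article.
REVENUE_THRESHOLD_USD = 1_000_000


def license_tier(commercial_use: bool, annual_revenue_usd: float) -> str:
    """Return an illustrative tier label for the rules described in the article."""
    if annual_revenue_usd < 0:
        raise ValueError("annual_revenue_usd must be non-negative")
    if not commercial_use:
        return "free: non-commercial use"
    # Assumption: revenue of exactly $1 million is treated as over the threshold.
    if annual_revenue_usd < REVENUE_THRESHOLD_USD:
        return "free: small-business commercial use"
    return "enterprise license required"
```

For example, `license_tier(True, 250_000)` returns the small-business tier, while `license_tier(True, 5_000_000)` indicates that an enterprise license is required.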

The ongoing discourse surrounding copyright in AI training data also plays a significant role. As AI continues to derive insights from an expansive pool of online content, concerns regarding ownership and originality have escalated. Stability AI maintains that it operates under the fair-use doctrine, although this legal shield is increasingly challenged, leading to class-action lawsuits initiated by artists and data owners whose works have been utilized without consent. The evolving legal landscape raises critical questions about the future of AI training methodologies, urging companies to refine their practices further.

Stability AI’s introduction of the Stable Diffusion 3.5 series is a notable step forward for image generation technology. However, the company must navigate a complex web of expectations, ethical considerations, and potential legal challenges. With enthusiasts and skeptics both watching closely, how well Stability AI delivers on diversity, quality, and accessibility will shape not only the user experience but also the broader conversation on AI’s ethical use and societal impact. With the release of Stable Diffusion 3.5 Medium still to come, industry stakeholders are keen to see whether the company can deliver on its ambitious promises, as the future of AI image generation increasingly hinges on responsible innovation.