Artificial intelligence (AI) has made remarkable advances in recent years, yet robotics, particularly in industrial settings, has lagged far behind in functional intelligence. The robots deployed in factories and warehouses are generally programmed to execute predetermined tasks in a precisely choreographed sequence. This rigidity is not merely a limitation but a barrier to broader use. These machines usually cannot make sense of their surroundings or adjust their actions on the fly, which makes them ill-suited to dynamic settings that demand quick decisions and adaptability.

Limits of Dexterity and Perception

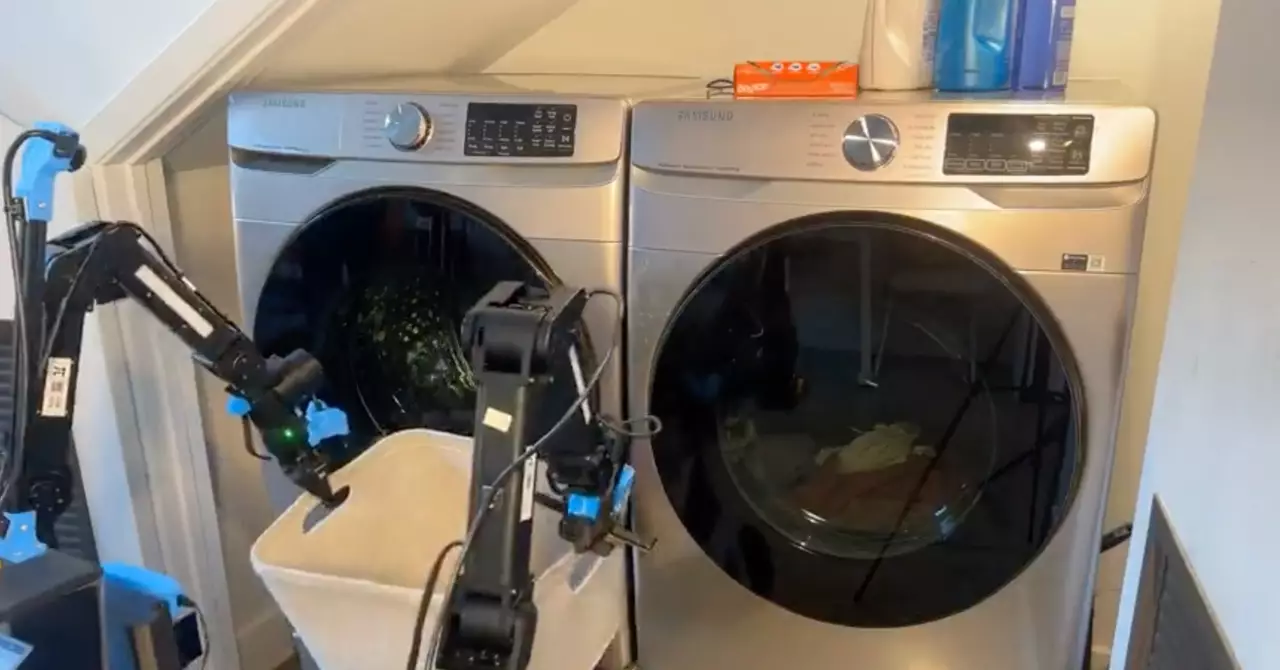

While some robots can recognize and manipulate objects, their skills remain severely constrained. Lacking general physical intelligence, they can perform only a narrow set of tasks, and with little finesse. The industry would benefit greatly from robots that can take on a wide variety of tasks with minimal guidance. Such adaptable robots could transform many sectors, handling jobs that range from simple household chores to complex assembly work in manufacturing.

The surge of enthusiasm around AI breakthroughs has raised hopes for parallel advances in robotics. High-profile projects such as Elon Musk's humanoid robot, Optimus, have amplified these expectations, with projections that it could be available at a consumer-friendly price by 2040. Musk envisions a robot able to carry out an extensive range of activities, fundamentally changing how humans and robots interact. Realizing this vision, however, depends on overcoming today's barriers to versatility.

Learning Across Tasks: A Paradigm Shift

Traditionally, robot training focused on individual machines mastering isolated tasks, and what one robot learned rarely transferred to another. Recent research has changed this outlook, showing that robots can acquire skills across many tasks when given enough data and suitable learning techniques. A 2023 Google initiative called Open X-Embodiment demonstrated this potential by sharing knowledge among 22 different robots from 21 labs. This kind of pooled learning may be key to broadening the skill sets of future robotic systems.

Despite this promise, a persistent challenge remains: high-quality training data for robots is scarce compared with the vast amounts of text available for training large language models. Companies such as Physical Intelligence have responded by generating their own datasets and developing new learning techniques to make training more efficient. By combining vision-language models with diffusion modeling, a technique best known from AI image generation, researchers aim to build a more holistic learning framework.

The Path Forward

For robots to handle any task a user assigns, both the scale of learning and the range of tasks robots can perform must grow substantially. It is important to acknowledge how far there is still to go. As one key industry figure emphasized, current developments serve merely as scaffolding for future capabilities. Understanding these complexities will be crucial to overcoming the hurdles that remain on the way to more sophisticated and dexterous robotic companions. The intersection of AI and robotics holds immense potential, but bridging the gap between current limitations and future possibilities will require sustained effort and innovative thinking.