As artificial intelligence (AI) becomes part of everyday life, questions arise about the quality of the information that feeds these technologies. One prominent issue is the reliance on flawed datasets, particularly those concerning intelligence quotient (IQ) measurements. The problem is especially pronounced in the controversial work of Richard Lynn, who compiled a “national IQ” database. Critics argue that the methodology and data quality behind Lynn’s work are unsound, and that its use has had serious repercussions, including the spread of racist ideologies within academic circles and beyond.

The crux of the issue is reliability. Lynn’s datasets rely on very small samples and selective sampling that undermine the credibility of his findings. For instance, the national IQ figure for Angola is based on just 19 individuals, a pool far too small, and too unlikely to be representative, to support conclusions about an entire country. Similarly, the IQ scores attributed to Eritrea come from children living in orphanages, which raises obvious concerns about representativeness and bias. Such sampling choices reflect poor academic rigor and cast doubt on the integrity of the published results.

Scholars and researchers who have analyzed Lynn’s work describe the underlying science as fundamentally flawed. They point out that the way these samples were selected steers the data toward outcomes that appear predetermined, particularly for populations on the African continent. This systematic bias is central to understanding why Lynn’s data cannot support claims about intelligence across racial or national lines.

The Ideological Implications of Flawed Research

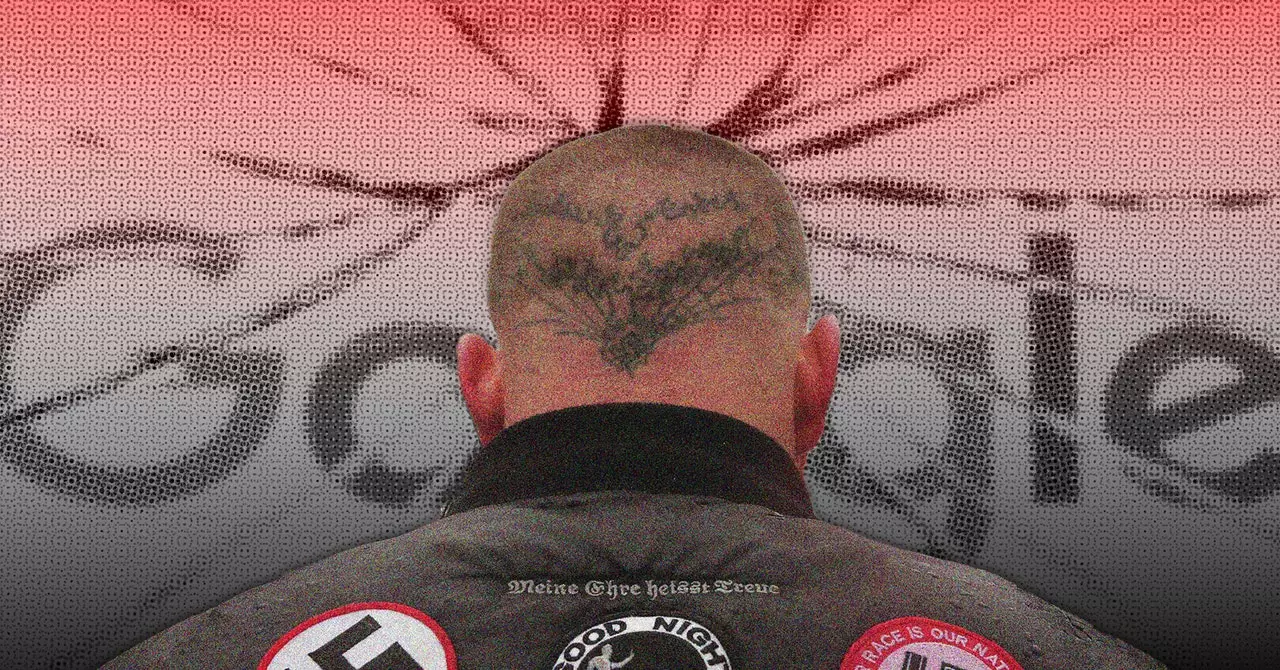

The repercussions of Lynn’s research extend far beyond academia. His datasets have been taken up by far-right groups to fuel arguments for racial superiority. This illustrates a central concern: when flawed data enters mainstream conversation, it breeds misinformation and reinforces harmful stereotypes. The misuse of Lynn’s work promotes the view that a simplistic measure of intelligence can define the worth of entire populations.

Maps of national IQ have become a tool for ideological manipulation. They starkly contrast sub-Saharan African countries, assigned purportedly low IQs, with Western countries, which are often labeled with higher scores. Such visualizations, which proliferate on social media, are not innocuous representations; they serve to legitimize and perpetuate bias against certain racial and national groups.

While the AI systems that draw on these datasets receive scrutiny, it is equally important to consider the scientific community’s own culpability. Critics argue that Lynn’s work has been cited without appropriate skepticism, allowing flawed notions to be treated as established fact. This lack of critical engagement has let misleading studies circulate unchecked within the academic literature, suggesting an uncomfortable alignment between parts of academia and right-wing ideologies.

The many citations of Lynn’s work over the years point to a broader systemic issue: the scientific community’s failure to subject flawed studies to adequate scrutiny. As Rutherford points out, the persistence of Lynn’s work in academic discourse reveals a troubling pattern of uncritical acceptance, one that allows erroneous data to shape public perception and policy-making.

In intelligence research, the way data are collected, presented, and interpreted raises complex problems that demand attention. Researchers must adopt rigorous methods to ensure data integrity, and they must also accept the moral responsibility that accompanies their findings. As society relies more heavily on AI for decision-making, the use of flawed information, such as Richard Lynn’s controversial databases, raises serious concerns about the potential consequences.

Ultimately, AI technology should not be built on the shaky foundations of dubious research. Its development must instead rest on transparency, accountability, and a steadfast commitment to ethical standards in how data is used. Only through a concerted effort to challenge and refine the data that informs AI systems can society hope to foster a more informed and equitable discourse on intelligence and its implications.