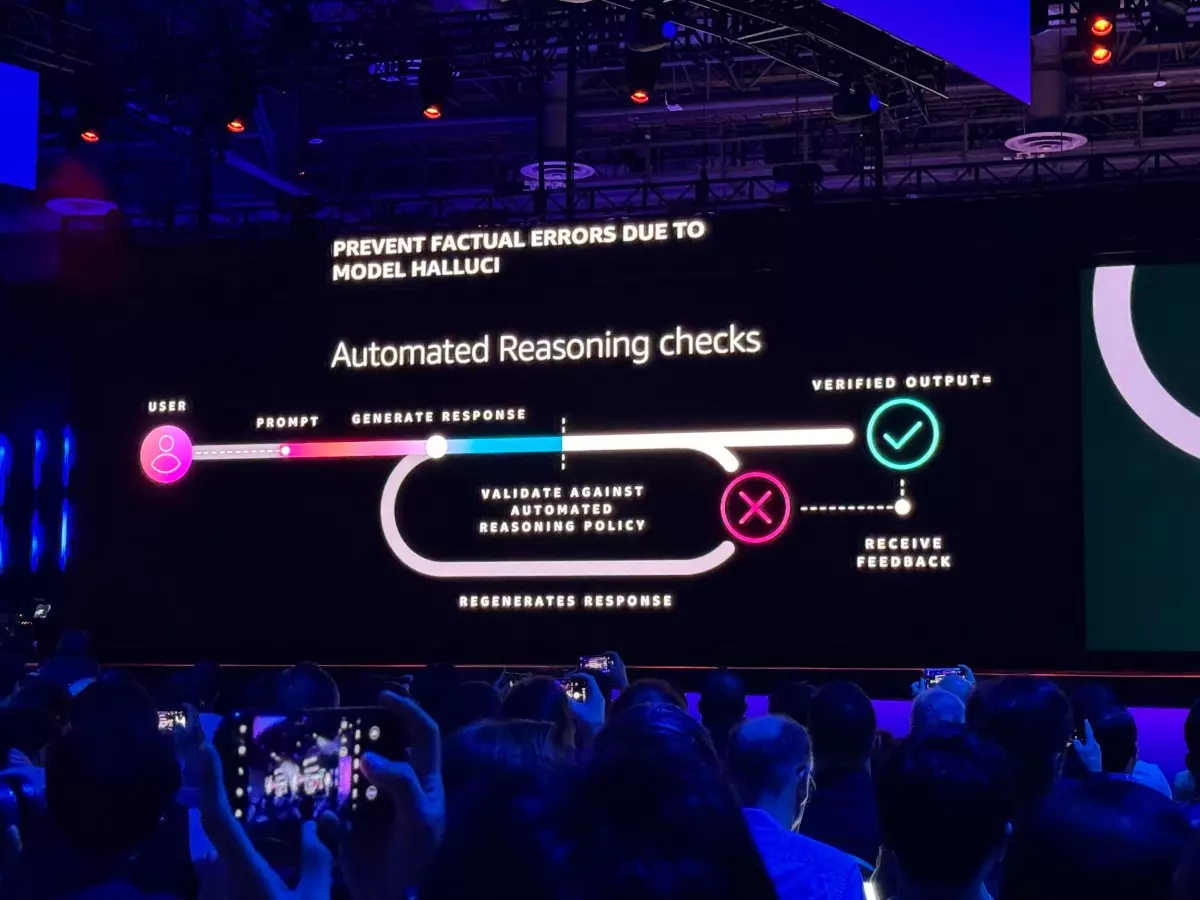

At its re:Invent 2024 conference, Amazon Web Services (AWS) unveiled a set of tools aimed at a critical problem in artificial intelligence: hallucinations. A hallucination occurs when an AI model produces inaccurate or misleading output, which raises concerns about the reliability of AI systems in real-world applications. One of the new tools, Automated Reasoning Checks, tries to counter the problem by cross-referencing model responses against customer-supplied information to verify their accuracy.

In a market crowded with similar tools, AWS’s headline claim is that Automated Reasoning Checks is the “first” and “only” solution of its kind. That assertion deserves scrutiny. The tool bears a striking resemblance to Correction, a feature Microsoft released earlier this year that also identifies and flags potentially erroneous AI-generated content. Google’s Vertex AI platform likewise offers mechanisms that let users “ground” AI outputs in vetted data from various sources. Against this backdrop, the question is whether AWS’s entry provides genuinely unique value or merely matches existing solutions.

Automated Reasoning Checks works from data that customers supply to establish a “ground truth.” The system analyzes how a model arrived at its output and compares that output with the verified data. When it judges a response to be a probable hallucination, it presents a corrected answer alongside the original output.
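The compare-and-correct flow described above can be illustrated with a toy example. This is only a sketch under stated assumptions, not AWS's implementation: the `CheckResult` type, the `check_response` function, and the `policy` dictionary are hypothetical names, and a plain substring match stands in for whatever reasoning the real service performs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CheckResult:
    original: str             # the model's answer, unchanged
    corrected: Optional[str]  # replacement answer, or None if nothing was flagged
    flagged: bool             # True when the answer contradicts the ground truth


def check_response(question: str, answer: str, ground_truth: dict[str, str]) -> CheckResult:
    """Compare a model answer with a customer-supplied fact for the same question."""
    expected = ground_truth.get(question)
    if expected is None:
        # No fact covers this question, so nothing can be verified either way.
        return CheckResult(original=answer, corrected=None, flagged=False)
    if expected.lower() in answer.lower():
        # The answer contains the verified fact; treat it as consistent.
        return CheckResult(original=answer, corrected=None, flagged=False)
    # Probable hallucination: keep the original and attach the verified fact.
    return CheckResult(original=answer, corrected=expected, flagged=True)


# Hypothetical ground truth supplied by the customer.
policy = {"How many days of annual leave do employees get?": "25 days"}

result = check_response(
    "How many days of annual leave do employees get?",
    "Employees get 30 days of annual leave.",
    policy,
)
print(result.flagged, "|", result.original, "|", result.corrected)
```

Returning both the original and the corrected text mirrors the behavior described above, where the reader sees the likely error next to the verified answer. Note that a question with no matching fact passes unflagged, which is one reason a check like this cannot catch every hallucination.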

The service is delivered through Bedrock, AWS’s model hosting platform, as part of its Guardrails tool. This setup lets customers refine the rules that govern a model’s behavior and adjust its responses based on the verification results. The service can improve accuracy, but it does not eradicate hallucinations. Many experts argue that hallucinations are inherent to the way these systems operate: as statistical models that predict from patterns, they do not “know” anything in the human sense and instead synthesize responses from the data they have previously encountered, which makes complete accuracy elusive.

AWS also announced Model Distillation, a tool designed to transfer capabilities from a larger model to a smaller, more efficient one. The feature targets organizations that need to balance performance against cost. Microsoft offers comparable tooling, but AWS claims its platform automates the refinement process and requires minimal manual input.

However, there are caveats. Model Distillation currently works only with AWS-hosted models from specific providers, and the large and small models must come from the same model family. A slight reduction in accuracy is also expected. This limited flexibility may deter potential customers who want a broader range of options for model optimization.
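To make the general idea of distillation concrete, here is a minimal sketch of the textbook soft-label formulation, in which a small "student" model is trained to match the softened output distribution of a large "teacher." Nothing in AWS's announcement says its service works this way internally; the function names, the temperature value, and the example scores are all illustrative assumptions.

```python
import math


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    """Turn raw scores into probabilities; a higher temperature gives a softer distribution."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL divergence between the teacher's and the student's softened distributions."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


teacher = [4.0, 1.0, 0.5]        # hypothetical scores from the large model
close_student = [3.5, 1.2, 0.4]  # a student that mostly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # a student that disagrees with the teacher

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge. A smaller student rarely reaches zero, which is consistent with the slight accuracy loss mentioned above.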

Multi-agent collaboration has also joined AWS’s Bedrock toolkit: AI agents can be assigned specific tasks within a larger project, and a “supervisor agent” oversees task delegation and coordination. The idea appears promising, but as with AWS’s other offerings, the real test is how well these systems perform in real-world use.
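The supervisor pattern itself is simple to sketch. The example below is a toy illustration of delegation and coordination, not the Bedrock API: the `Supervisor` class, the skill names, and the worker functions are hypothetical, and each worker is a plain function rather than a model-backed agent.

```python
from typing import Callable

# A worker agent is modeled as a plain function from a task description to a result.
Agent = Callable[[str], str]


class Supervisor:
    """Routes each subtask to the worker registered for its skill and gathers the results."""

    def __init__(self) -> None:
        self.workers: dict[str, Agent] = {}

    def register(self, skill: str, agent: Agent) -> None:
        self.workers[skill] = agent

    def run(self, plan: list[tuple[str, str]]) -> list[str]:
        results = []
        for skill, task in plan:
            agent = self.workers.get(skill)
            if agent is None:
                # No worker has this skill; record the gap instead of failing.
                results.append(f"unassigned: {task}")
                continue
            results.append(agent(task))
        return results


supervisor = Supervisor()
supervisor.register("research", lambda task: f"research notes for '{task}'")
supervisor.register("writing", lambda task: f"draft of '{task}'")

report = supervisor.run([
    ("research", "Q3 sales figures"),
    ("writing", "summary email"),
    ("legal", "contract review"),
])
for line in report:
    print(line)
```

Even in this toy version, the hard questions are visible: the supervisor is only as good as its plan, and a task with no suitable worker has to be handled somehow. Those are the practical details on which real deployments will be judged.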

Despite these advancements, hallucinations remain a significant hurdle for AWS and for the industry as a whole. Achieving complete accuracy in AI-generated information is a monumental task, because models inevitably deal with incomplete or imprecise data. Eliminating hallucinations has been likened to trying to eliminate hydrogen from water: they are part of what the technology is made of.

AWS claims that Automated Reasoning Checks uses “logically accurate” and “verifiable reasoning,” but it has yet to substantiate this with empirical evidence. Without data demonstrating the tool’s effectiveness and reliability, establishing trust with potential clients may be difficult. As businesses increasingly adopt generative AI, they will look for solutions that not only promise reliability but also deliver tangible reductions in errors.

Automated Reasoning Checks and the other tools announced alongside it indicate AWS’s commitment to addressing the inconsistent reliability of AI systems. However, the path ahead is fraught with challenges, including proving that its offerings are unique and that they work in both internal and client-facing applications. Without transparent data demonstrating success, AWS’s position as a leader in this complex market will remain in question. Technological advances alone will not settle it; the company also needs robust evidence that its tools are reliable and stand apart from those of its competitors.